If you are having trouble finding the right LiteLLM page, the links below point to the main LiteLLM resources.

https://github.com › BerriAI › litellm

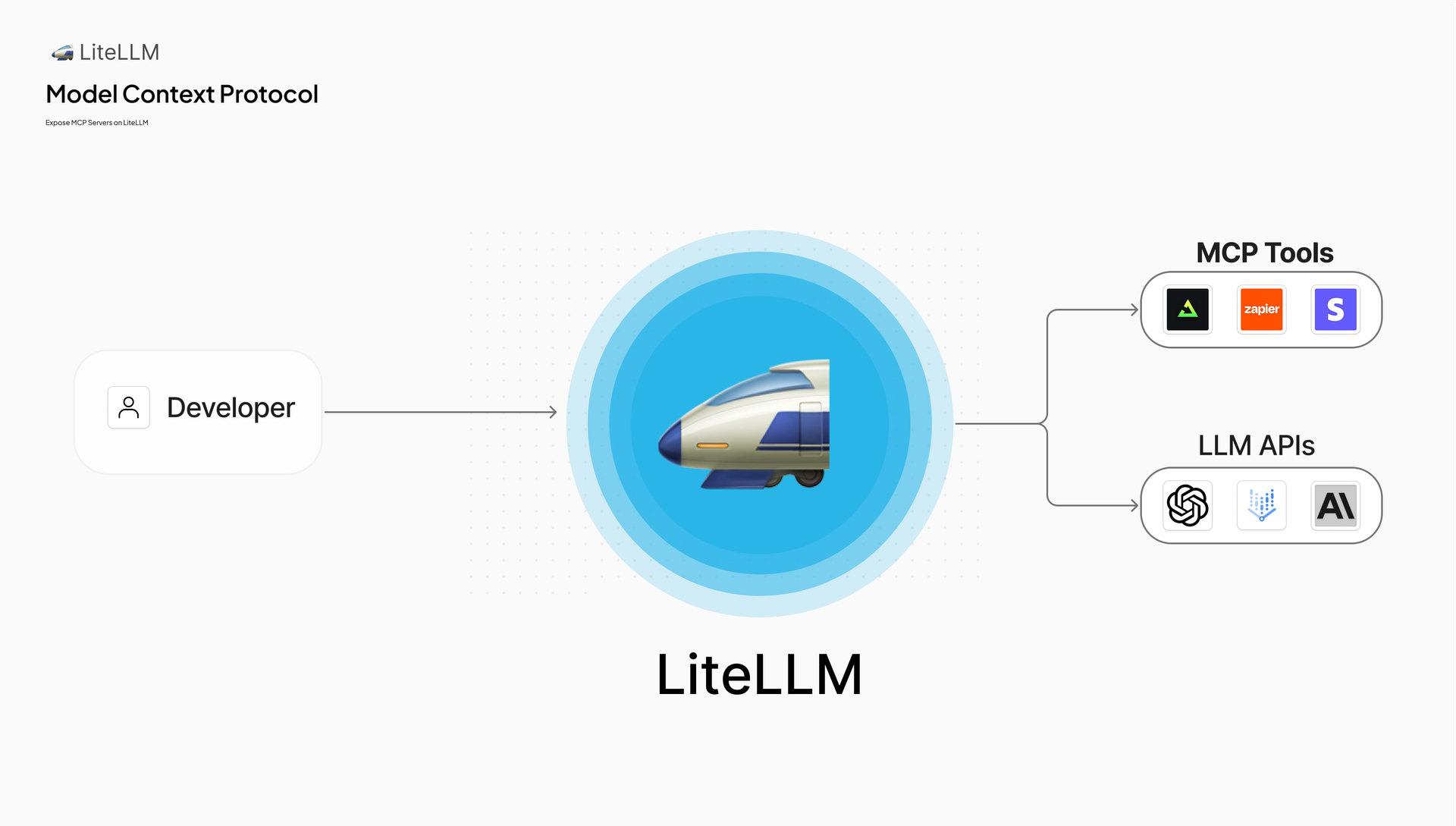

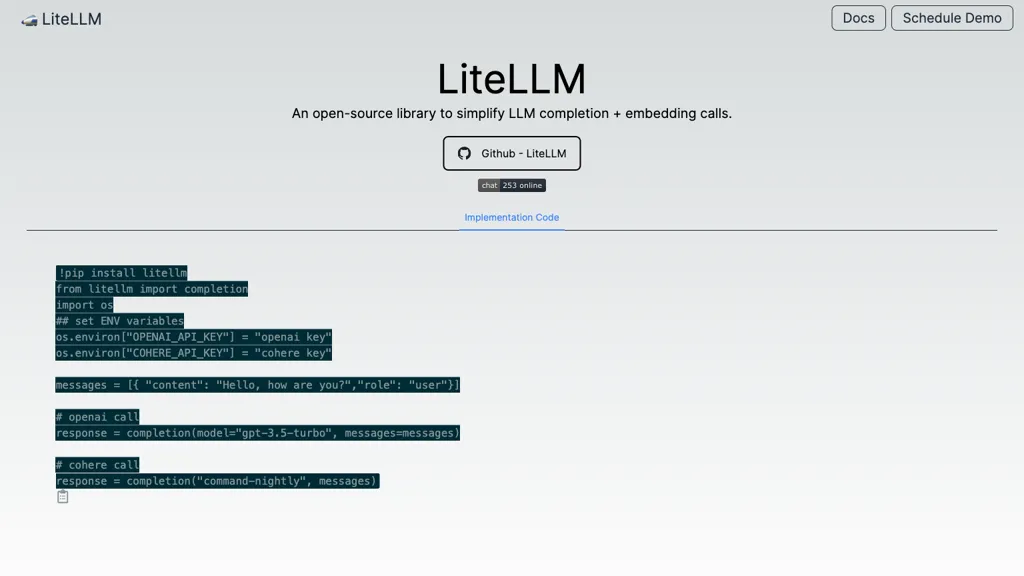

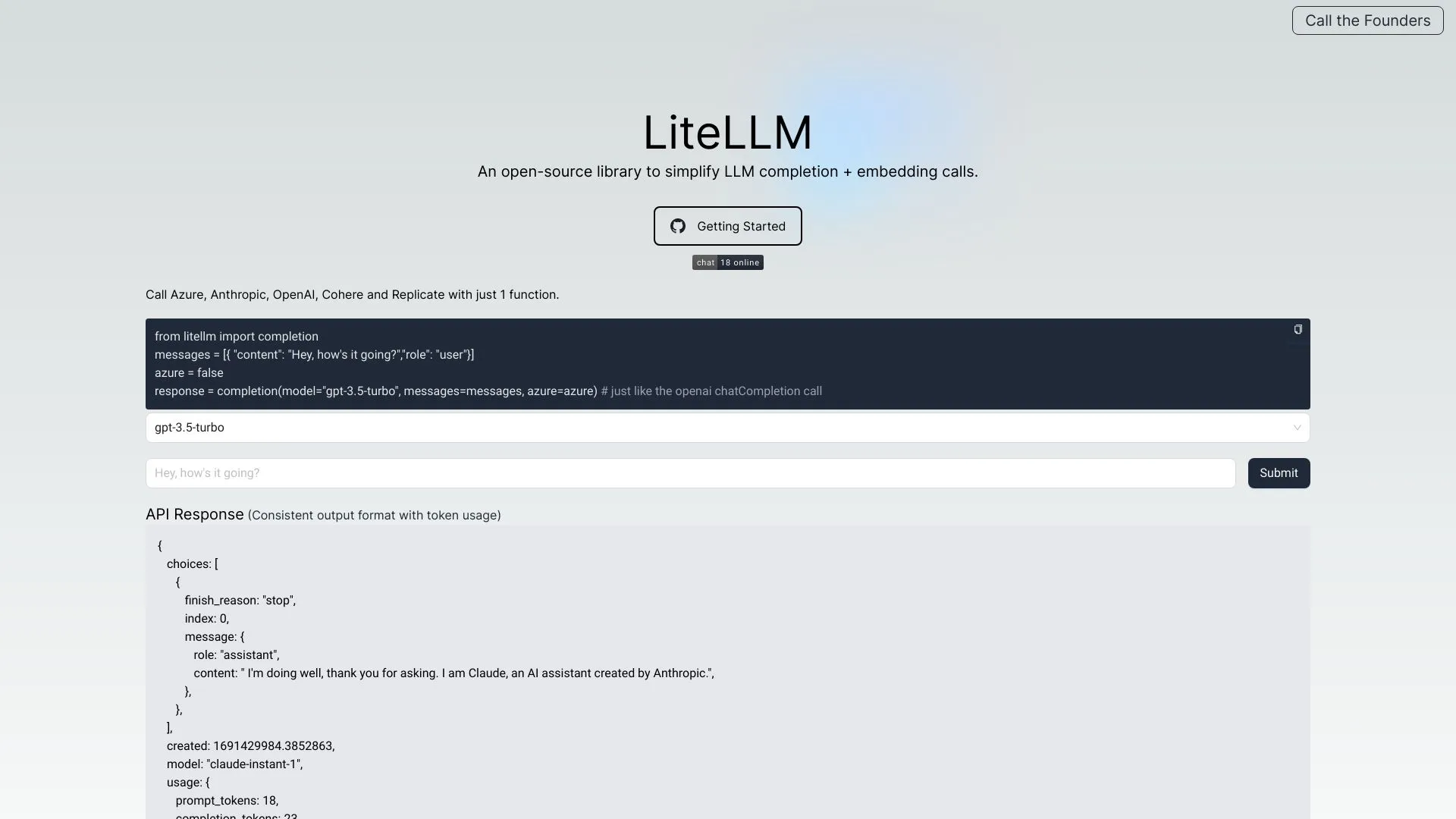

Python SDK and Proxy Server (AI Gateway) to call 100+ LLM APIs in OpenAI (or native) format, with cost tracking, guardrails, load balancing, and logging. Supported providers include Bedrock, Azure, OpenAI, VertexAI, and Cohere.

https://medium.com › mitb-for-all

Born out of the illustrious Y Combinator program, LiteLLM is a lightweight, powerful abstraction layer that unifies LLM API calls across providers, whether you're calling OpenAI ...

https://pypi.org › project › litellm

LiteLLM supports streaming the model response back: pass `stream=True` to get a streaming iterator in response. Streaming is supported for all models (Bedrock, Huggingface, ...).
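
The streaming behavior described in this snippet can be sketched as follows. This is a minimal sketch, not the project's own example: it assumes the chunks follow the OpenAI streaming shape (`chunk.choices[0].delta.content`), and the helper names `collect_stream`, `stream_completion`, and `fake_chunk` are hypothetical. `litellm` is imported lazily, so the chunk-joining helper can be tried without the package or an API key.

```python
from types import SimpleNamespace
from typing import Iterable


def collect_stream(chunks: Iterable) -> str:
    """Join the text deltas of OpenAI-style streaming chunks into one string."""
    parts = []
    for chunk in chunks:
        if not chunk.choices:
            continue  # some chunks may carry no choices at all
        text = getattr(chunk.choices[0].delta, "content", None)
        if text:  # the final chunk usually has no content
            parts.append(text)
    return "".join(parts)


def stream_completion(model: str, prompt: str) -> str:
    """Call LiteLLM with stream=True and return the assembled reply.

    Needs the litellm package and provider credentials in the environment.
    """
    import litellm  # lazy import: only needed for a real call

    response = litellm.completion(
        model=model,
        messages=[{"role": "user", "content": prompt}],
        stream=True,  # returns an iterator of chunks instead of one response
    )
    return collect_stream(response)


def fake_chunk(text):
    """Hypothetical stand-in for a streamed chunk, for offline experiments."""
    delta = SimpleNamespace(content=text)
    return SimpleNamespace(choices=[SimpleNamespace(delta=delta)])
```

Calling `collect_stream` on a list of `fake_chunk(...)` objects exercises the joining logic offline; `stream_completion` does the same with a live model and a model string of your choice.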

https://www.litellm.ai

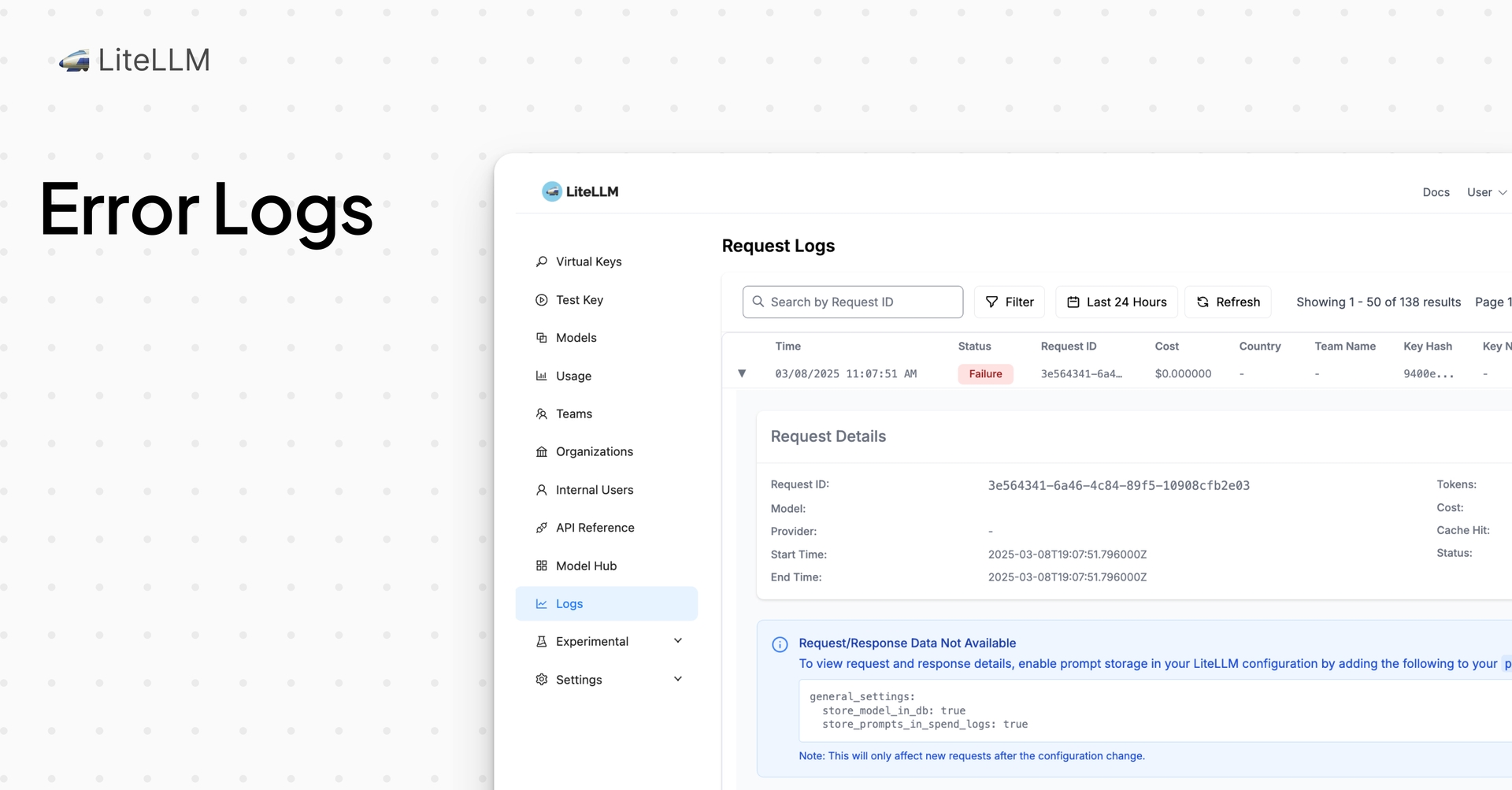

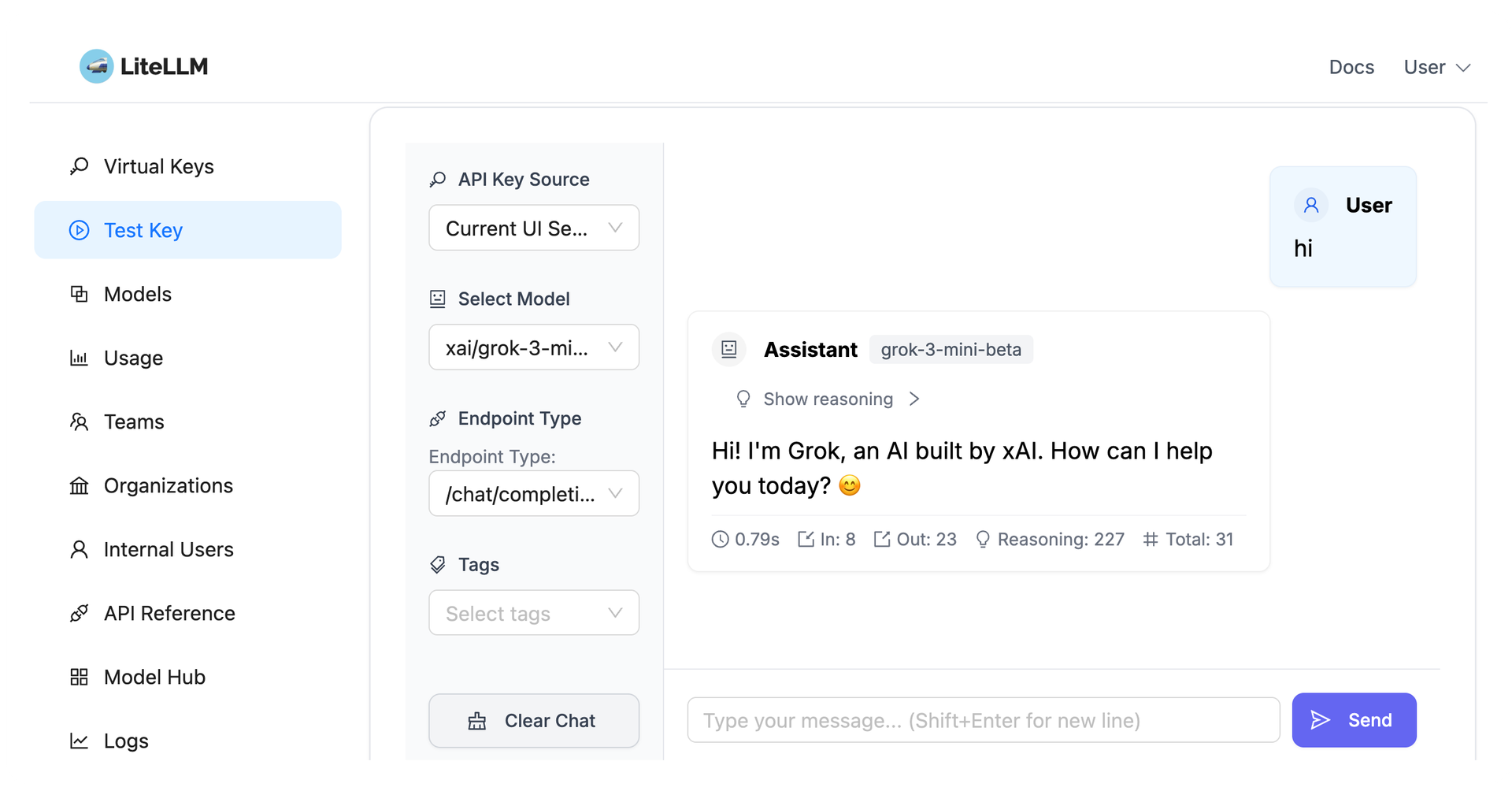

The LiteLLM proxy has streamlined our management of LLMs by standardizing logging, the OpenAI API, and authentication for all models, significantly reducing operational complexities.

https://berriai.github.io › litellm

How to use LiteLLM: you can use LiteLLM through either the Proxy Server or the Python SDK. Both give you a unified interface to access multiple LLMs (100+ LLMs). Choose the option that best fits your ...
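
As a rough illustration of the "unified interface" idea on the Python SDK side, the sketch below builds the same OpenAI-style request for two different providers, with only the model string changing. The model names are placeholders chosen for illustration, and `build_request` and `ask` are hypothetical helpers, not part of LiteLLM. The only library call assumed is `litellm.completion(model=..., messages=...)`.

```python
def build_request(model: str, prompt: str, **extra) -> dict:
    """Build OpenAI-style keyword arguments for a single-turn chat request."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        **extra,
    }


def ask(model: str, prompt: str) -> str:
    """Send the request through LiteLLM and return the reply text.

    Needs the litellm package and credentials for the chosen provider.
    """
    import litellm  # lazy import: build_request works without it

    response = litellm.completion(**build_request(model, prompt))
    return response.choices[0].message.content


# Illustrative model strings (assumptions): only this field differs per provider.
openai_request = build_request("gpt-4o-mini", "Hello")
anthropic_request = build_request("anthropic/claude-3-haiku-20240307", "Hello")
```

Because the request shape stays the same, switching providers in this sketch means changing one string rather than rewriting the call site.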

https://www.youtube.com › watch

In this tutorial, I have explored the fundamentals of LiteLLM, an AI gateway that allows access to over 100 LLMs using the OpenAI input/output format.

https://www.infoworld.com › article › litellm...

LiteLLM is an open source project that tackles this fragmentation head-on by providing a unified interface and gateway to call more than 100 LLM APIs using a single, consistent format.

https://blog.smaranjitghose.com › litellm-tutorial

LiteLLM is a powerful open source toolkit that revolutionizes how developers interact with Large Language Models (LLMs). Think of it as a universal translator for LLMs: it allows your ...

https://github.com › BerriAI › litellm › blob › main › README.md

LiteLLM supports streaming the model response back: pass `stream=True` to get a streaming iterator in response. Streaming is supported for all models (Bedrock, Huggingface, TogetherAI, Azure, OpenAI, ...).
